Guide To Prompt Engineering

Hey, Gregor here 👋 This is a paid edition of the Engineering Leadership newsletter. Every week, I share 2 articles → Wednesday’s paid edition and Sunday’s free edition, with a goal to make you a great engineering leader!

An in-depth guide for creating optimized prompts from a Product Lead at OpenAI

Intro

Prompt engineering is becoming an increasingly important skill for any engineer or engineering leader. Used the right way, it can be an incredibly helpful tool for making your work easier.

In this article, you’ll learn in depth what prompt engineering is and how to refine your prompts to get better outputs. You’ll learn about 3 prompting techniques, 4 prompting strategies, and various frameworks. You’ll also get 20+ example prompts that you can adapt to your specific use case.

To bring you the best insights, I’m happy to team up with Miqdad Jaffer, Product Lead at OpenAI. This is an article for paid subscribers.

Introducing Miqdad Jaffer

Miqdad Jaffer is a Product Lead at OpenAI. Before that, he was a Director of Product at Shopify, where he led a group responsible for integrating AI across Shopify.

Miqdad teaches a popular course called AI Product Management Certification. I’ve taken a close look at the course and learned a lot from it. I highly recommend it to any engineer or engineering leader (not just PMs) who wants a better understanding of how to use AI to build great products. Check out the course and use my code GREGOR25 for $500 off. The next cohort starts on July 13.

1. What Is Prompt Engineering?

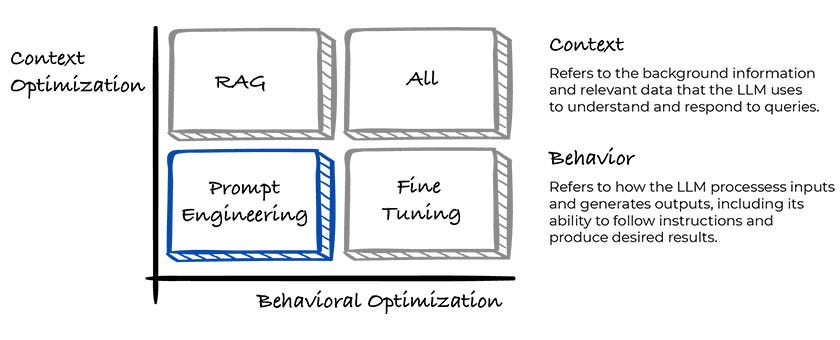

Prompt engineering involves understanding how to structure prompts to activate the model's knowledge based on its training data. The goal is to optimize the model's ability to follow instructions and produce accurate, relevant responses.

Always Start With Prompt Engineering First

There are 4 main techniques for getting high-quality output from LLMs:

And you should always start with prompt engineering. Why is that?

Prompt engineering gives you the biggest return on your time and effort, and it is necessary for every LLM-based implementation. There is no such thing as just doing RAG (retrieval-augmented generation) or just doing fine-tuning: you have to start with prompt engineering and then layer on other approaches if required.

There are a number of techniques, strategies, and frameworks for prompting.

The techniques represent the fundamental methods for prompting LLMs, ranging from simple zero-shot approaches to more complex chain-of-thought methods.

The strategies we’ll cover provide proven tactics for improving prompt effectiveness, focusing specifically on how to structure and communicate your instructions to the LLM.

The frameworks we'll explore are established methodologies that combine these techniques and strategies into repeatable processes that you can apply consistently across different use cases.

Let’s dig into the 3 prompting techniques.

2. Prompting Techniques

Let's examine the three most commonly used prompting techniques and when to use each one.

2.1 Zero-shot Prompting

This is what most of us use today. You give the LLM an input query without any examples. It's best for straightforward, low-complexity tasks where the instruction is clear and direct, like summarizing a short paragraph.

Think of this as the way you interact with ChatGPT today. You might say something along the lines of:
- Summarize this paragraph for me.
- Write a poem about this.
- Create content for this.
- Write code based on this single instruction.

Example prompt:

2.2 Few-shot Prompting

This involves providing a few examples to guide the model's output. Imagine teaching someone how to classify weather. You might say that sunny and warm means beach weather, and cloudy and cool means sweater weather. After these examples, the LLM can infer that rainy and cold means indoor weather.

That's how few-shot prompting works: you show the pattern you want through examples, and the model follows it.

Example prompt:

2.3 Chain-of-Thought Prompting

Chain-of-thought prompting is all about breaking tasks down into logical, sequential steps. It's ideal for complex tasks that require step-by-step reasoning, such as explaining how a bill becomes a law. It helps the model, and often the user, follow the logical progression of the solution.

The key here is to match the technique to your task complexity and your desired output format.

Example prompt:
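To make the three techniques concrete, here is a minimal Python sketch that only assembles prompt text and doesn't call any model API. The function names (`zero_shot`, `few_shot`, `chain_of_thought`) and the `Input:`/`Output:` layout are hypothetical choices for illustration, not a format required by ChatGPT or any other LLM. The few-shot call reuses the weather example from the few-shot section.

```python
from __future__ import annotations

# Hypothetical helpers: each one only assembles prompt text for one technique.
# Sending the prompt to a model is intentionally left out.


def zero_shot(instruction: str, text: str) -> str:
    # Zero-shot: a direct instruction plus the input, with no examples.
    return f"{instruction}\n\n{text}"


def few_shot(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    # Few-shot: show labelled input/output pairs so the model can infer the
    # pattern, then leave the last "Output:" open for the model to complete.
    # The Input:/Output: layout is an illustrative assumption, not a required format.
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


def chain_of_thought(question: str, steps: list[str] | None = None) -> str:
    # Chain-of-thought: ask the model to reason through sequential steps
    # before committing to a final answer. Optional steps make the
    # decomposition explicit instead of leaving it entirely to the model.
    lines = [question, "", "Work through this step by step."]
    for number, step in enumerate(steps or [], start=1):
        lines.append(f"{number}. {step}")
    lines.append("Then give your final answer.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(zero_shot("Summarize this paragraph for me.", "<paragraph goes here>"))
    print()
    print(
        few_shot(
            "Classify the weather as a type of day.",
            [("sunny and warm", "beach weather"), ("cloudy and cool", "sweater weather")],
            "rainy and cold",
        )
    )
    print()
    print(
        chain_of_thought(
            "Explain how a bill becomes a law.",
            [
                "List the stages in order.",
                "Explain what happens at each stage.",
                "Summarize the whole process.",
            ],
        )
    )
```

Running the script prints each prompt. In the few-shot one, the two weather examples come first and the final `Output:` line is left open; that is the slot the model is expected to fill, ideally with something like "indoor weather". Passing explicit `steps` to `chain_of_thought` is one design choice among several; many prompts simply ask the model to reason step by step and let it choose the steps itself.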
