Comma v0.1 1T and 2T - 7B LLMs trained on openly licensed text

Plus o3-pro, Mistral's Magistral, 80% cheaper o3

In this newsletter:

Comma v0.1 1T and 2T - 7B LLMs trained on openly licensed text

Plus 6 links and 3 quotations and 1 note

Comma v0.1 1T and 2T - 7B LLMs trained on openly licensed text - 2025-06-07

It's been a long time coming, but we finally have some promising LLMs to try out which are trained entirely on openly licensed text!

EleutherAI released the Pile four and a half years ago: "an 800GB dataset of diverse text for language modeling". It's been used as the basis for many LLMs since then, but much of the data in it came from Common Crawl, a crawl of the public web which mostly ignored the licenses of the data it was collecting.

The Common Pile v0.1 is EleutherAI's successor to the original Pile, built in collaboration with a large group of other organizations with whom they have been "meticulously curating a 8 TB corpus of openly licensed and public domain text for training large language models".

The dataset is exciting, but on top of that they've released two new LLMs that have been trained on it: Comma v0.1 1T and 2T, both with 7 billion parameters, the first trained on 1 trillion tokens and the second on 2 trillion tokens. These are available on Hugging Face as common-pile/comma-v0.1-1t and common-pile/comma-v0.1-2t.

EleutherAI claim that these new models perform "comparably to leading models trained in the same regime on unlicensed data". I decided to try them out myself.

The models are currently only available as […]. MLX is still a very new format, but Claude 4 Sonnet has a training cutoff date of March 2025, so I crossed my fingers and hoped it would be able to help me out. It did exactly that! I ran the following command to convert the 2T model to run using MLX:

I uploaded the converted model to Hugging Face as simonw/comma-v0.1-2t-mlx.

Now that it's on the Hub, here's how to try it out (using […]):

The first time you run this it will download 13GB of files to […].

Here's what I got back:

The big limitation of this model right now is that it's a raw base model: it hasn't been instruction-tuned or set up for chat. This means you have to prefix-prompt it, like in the GPT-3 days: you need to give it a sentence for it to complete. This makes it a lot harder to evaluate than the instruction-tuned models that I've become used to over the past few years!

I'm hoping someone releases a chat-tuned version of this model soon. The challenge there will be keeping to the openly licensed training data, since most of the fine-tuning datasets out there are themselves derived from models that were trained on unlicensed data.

Sadly it didn't do too well on my pelican on a bicycle benchmark. The output started like this and looped indefinitely:
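On the prefix-prompting point: because Comma is a raw base model, a request has to be written as the start of some text that the model will continue. Below is a minimal sketch of the GPT-3-era few-shot pattern. `completion_prompt` and `truncate_at_stop` are hypothetical helpers, the "Q:"/"A:" layout is an arbitrary choice, and nothing here calls the model itself. You would pass the resulting string to whatever generation command you use.

```python
def completion_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Build a few-shot text prefix for a base (non-chat) model.

    Hypothetical helper: each example is written out as a Q/A pair and the
    final answer line is left open for the model to continue.
    """
    lines = []
    for q, a in examples:
        lines.append(f"Q: {q}")
        lines.append(f"A: {a}")
        lines.append("")  # blank line between examples
    lines.append(f"Q: {question}")
    lines.append("A:")  # the model is expected to complete this line
    return "\n".join(lines)


def truncate_at_stop(generated: str, stop: str = "\nQ:") -> str:
    """Cut a raw continuation at the first stop sequence, if there is one.

    Base models tend to keep going (inventing the next "Q:" and so on),
    so the caller trims the output at a chosen stop sequence.
    """
    idx = generated.find(stop)
    return generated if idx == -1 else generated[:idx]


prompt = completion_prompt(
    [("What is the capital of France?", "Paris.")],
    "What is the capital of Japan?",
)
print(prompt)
```

A stop sequence doesn't make a base model smarter. It simply discards everything after the point where the model starts inventing the next question, which is one cheap defense against runaway output.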

Quote 2025-06-07

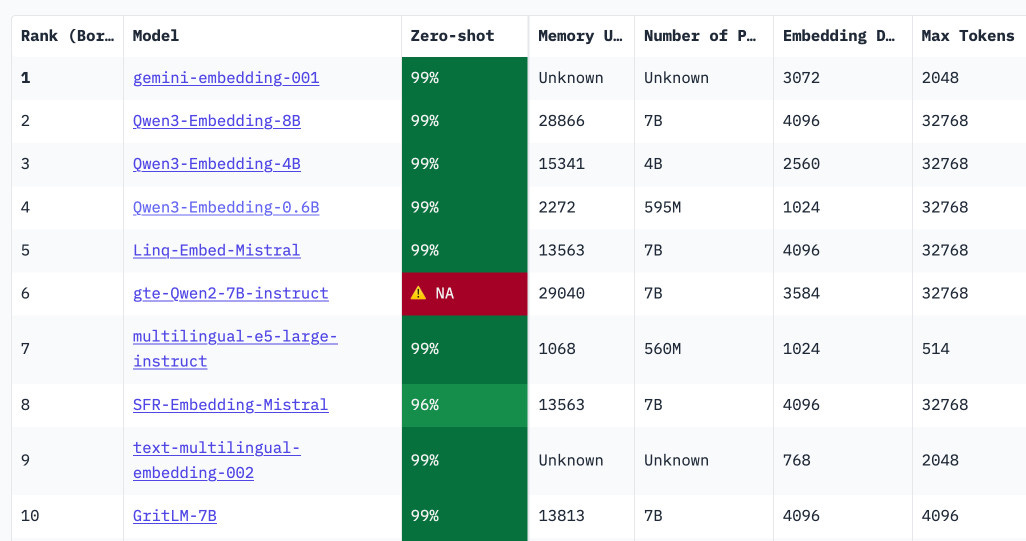

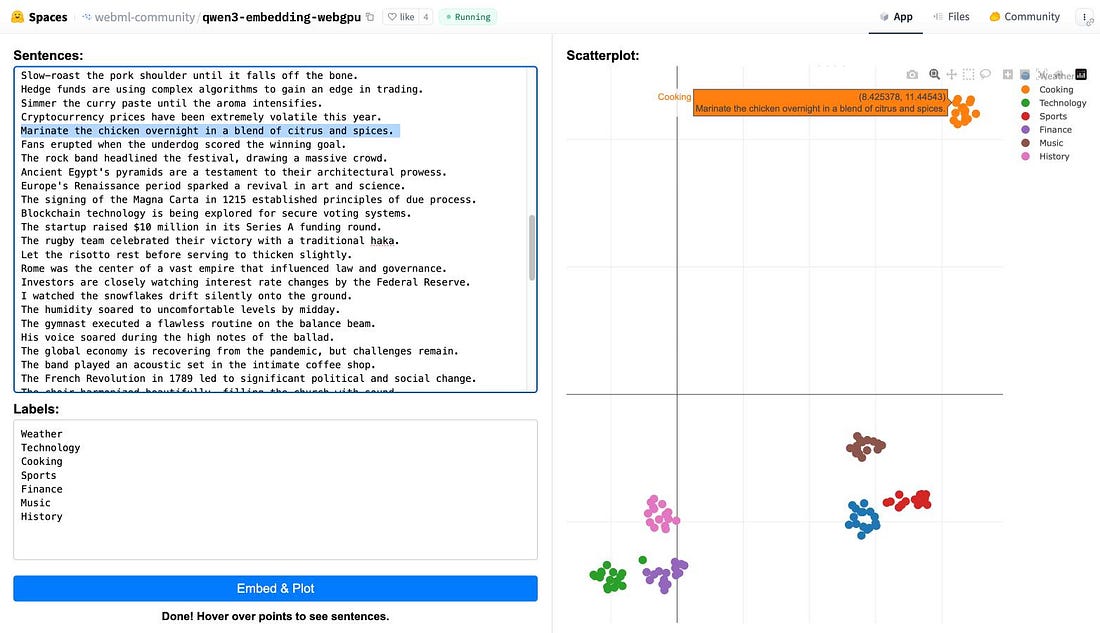

Link 2025-06-08 Qwen3 Embedding: New family of embedding models from Qwen, in three sizes (0.6B, 4B and 8B) and two categories: Text Embedding and Text Reranking. The full collection can be browsed on Hugging Face. The smallest available model is the 0.6B Q8 one, which is available as a 639MB GGUF. I tried it out using my llm-sentence-transformers plugin like this:

This output 1024, confirming that Qwen3 0.6B produces 1024-dimensional embedding vectors.

These new models are the highest-scoring open-weight models on the well-regarded MTEB leaderboard, and they're licensed Apache 2.0.

You can also try them out in your web browser, thanks to a Transformers.js port of the models. I loaded this page in Chrome (source code here) and it fetched 560MB of model files and gave me an interactive interface for visualizing clusters of embeddings like this:

Quote 2025-06-09
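Back on the Qwen3 Embedding models: cosine similarity is a common way to compare vectors like the 1024-dimensional ones above. Here is a minimal pure-Python sketch. It assumes each embedding arrives as a plain list of floats (the plugin's actual output format isn't shown here), and the vectors in the example are placeholders, not real model output.

```python
import math
from typing import Sequence

# Dimension reported for Qwen3-Embedding-0.6B in the test above.
EXPECTED_DIMENSIONS = 1024


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError(f"dimension mismatch: {len(a)} vs {len(b)}")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def check_dimensions(vector: Sequence[float]) -> None:
    """Guard against mixing embeddings from models with different output sizes."""
    if len(vector) != EXPECTED_DIMENSIONS:
        raise ValueError(f"expected {EXPECTED_DIMENSIONS} dims, got {len(vector)}")


# Placeholder vectors standing in for real model output.
a = [1.0] + [0.0] * (EXPECTED_DIMENSIONS - 1)
b = [1.0, 1.0] + [0.0] * (EXPECTED_DIMENSIONS - 2)
check_dimensions(a)
check_dimensions(b)
print(round(cosine_similarity(a, b), 4))  # 0.7071
```

The dimension check matters in practice. Vectors from the 0.6B, 4B and 8B models should not be compared with each other, and a length mismatch is the cheapest way to catch that mistake.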

Link 2025-06-09 OpenAI hits $10 billion in annual recurring revenue fueled by ChatGPT growth: Noteworthy because OpenAI revenue is a useful indicator of the direction of the generative AI industry in general, and frequently comes up in conversations about the sustainability of the current bubble.

So these new numbers represent nearly double the ARR figures for last year.

Link 2025-06-09 WWDC: Apple supercharges its tools and technologies for developers: Here's the Apple press release for today's WWDC announcements. Two things that stood out to me:

Here's new documentation on Generating content and performing tasks with Foundation Models. The Swift code looks like this:

There's also a 23-minute Meet the Foundation Models framework video from the conference, which clarifies that this is a 3 billion parameter model with 2-bit quantization. The model is trained for both tool-calling and structured output, which they call "guided generation" and describe as taking advantage of constrained decoding.

I'm also very excited about this:

I continue to seek the ideal sandboxing solution for running untrusted code - both from other humans and written for me by LLMs - on my own machines. This looks like it could be a really great option for that going forward. It looks like apple/container on GitHub is part of this new feature. From the technical overview:
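Returning to the "guided generation" feature of Apple's Foundation Models framework: constrained decoding generally works by masking out every token that would take the output outside a permitted shape before the next token is chosen. The toy below is not Apple's implementation, which is Swift and isn't shown here. It's a hypothetical greedy decoder in which a made-up vocabulary and scoring function stand in for a real model, and the "shape" is simply a fixed set of allowed strings.

```python
from typing import Callable, Sequence


def constrained_decode(
    vocab: Sequence[str],
    score: Callable[[str, str], float],
    choices: Sequence[str],
    max_steps: int = 20,
) -> str:
    """Greedy decoding in which the text must always be a prefix of an allowed choice.

    `score(prefix, token)` stands in for the model's logit for `token`
    given the text generated so far (hypothetical interface).
    """
    out = ""
    for _ in range(max_steps):
        if out in choices:
            return out  # a complete allowed value has been produced
        # The mask: keep only tokens that still lead toward some allowed choice.
        allowed = [t for t in vocab if any(c.startswith(out + t) for c in choices)]
        if not allowed:
            raise ValueError(f"no valid continuation after {out!r}")
        prefix = out
        out += max(allowed, key=lambda t: score(prefix, t))
    raise ValueError("max_steps exhausted before reaching an allowed value")


# A fake "model" that would rather say "may" than either permitted answer.
PREFERENCES = {"may": 3.0, "be": 2.5, "no": 1.0, "yes": 0.5}


def fake_score(prefix: str, token: str) -> float:
    return PREFERENCES.get(token, 0.0)


answer = constrained_decode(["yes", "no", "may", "be"], fake_score, ["yes", "no"])
print(answer)  # no
```

Without the mask, this fake model's top-scoring first token would be "may", which isn't a valid start of either answer. With the mask, the decoder is forced into the best-scoring token that can still produce a legal value. Real systems apply the same idea with grammars or JSON schemas instead of a short list of strings.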

Link 2025-06-10 Magistral — the first reasoning model by Mistral AI: Mistral's first reasoning model is out today, in two sizes. There's a 24B Apache 2 licensed open-weights model called Magistral Small (actually Magistral-Small-2506), and a larger API-only model called Magistral Medium. Magistral Small is available as mistralai/Magistral-Small-2506 on Hugging Face. From that model card:

Mistral also released an official GGUF version, Magistral-Small-2506_gguf, which I ran successfully using Ollama like this:

That fetched a 25GB file. I ran prompts using a chat session with llm-ollama like this:

Here's what I got for "Generate an SVG of a pelican riding a bicycle" (transcript here):

It's disappointing that the GGUF doesn't support function calling yet. Hopefully a community variant can add that, as it's one of the best ways I know of to unlock the potential of these reasoning models.

I just noticed that Ollama have their own Magistral model too, which can be accessed using:

That gets you a 14GB […].

One thing that caught my eye in the Magistral announcement:

I guess this means the reasoning traces are fully visible and not redacted in any way - interesting to see Mistral trying to turn that into a feature that's attractive to the business clients they are most interested in appealing to. Also from that announcement:

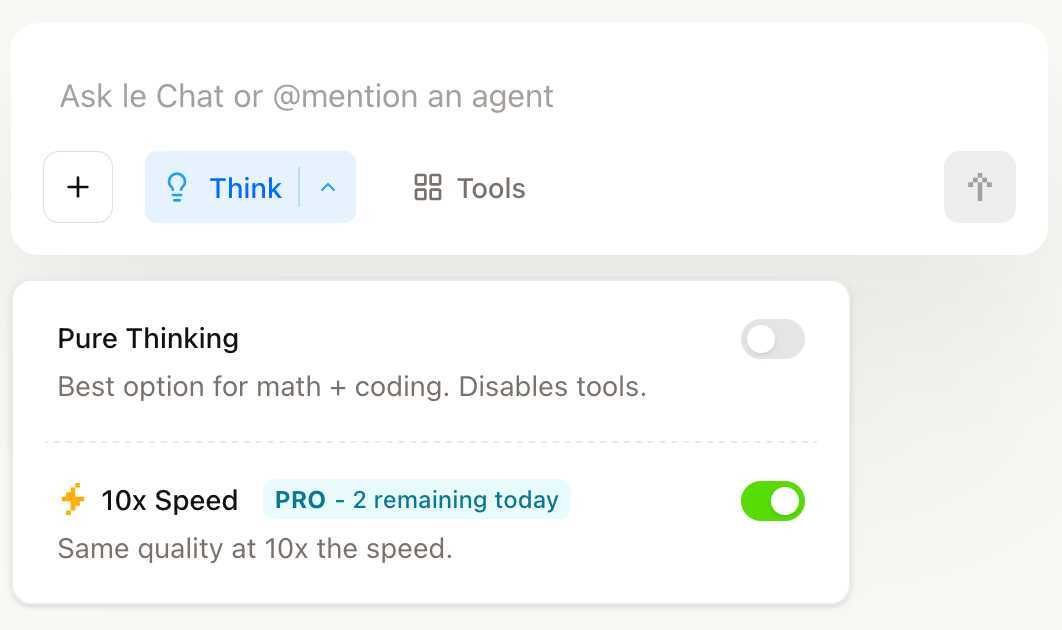

I haven't seen a reasoning model promoted for creative writing in this way before.

You can try out Magistral Medium by selecting the new "Thinking" option in Mistral's Le Chat. They have options for "Pure Thinking" and a separate option for "10x speed", which runs Magistral Medium at 10x the speed using Cerebras.

The new models are also available through the Mistral API. You can access them by installing llm-mistral and running […]. Here's that transcript. At 13 input and 1,236 output tokens that cost me 0.62 cents, just over half a cent.

Note 2025-06-10

OpenAI just dropped the price of their o3 model by 80%, from $10/million input tokens and $40/million output tokens to just $2/million and $8/million for the very same model. This is in advance of the release of o3-pro, which apparently is coming later today (update: here it is).

This is a pretty huge shake-up in LLM pricing. o3 is now priced the same as GPT-4.1, and slightly less than GPT-4o ($2.50/$10). It's also less than Anthropic's Claude Sonnet 4 ($3/$15) and Opus 4 ($15/$75), and sits in between Google's Gemini 2.5 Pro for >200,000 tokens ($2.50/$15) and 2.5 Pro for <200,000 ($1.25/$10). I've updated my llm-prices.com pricing calculator with the new rate.

How have they dropped the price so much? OpenAI's Adam Groth credits ongoing optimization work:
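To double-check the pricing arithmetic above, here's a minimal Python sketch. It assumes Magistral Medium's API list price is $2/million input and $5/million output tokens. Those prices aren't stated in this post, but they reproduce the 0.62 cents figure. It uses the o3 prices quoted above, and the 10,000 input / 2,000 output token workload is purely hypothetical.

```python
def cost_usd(input_tokens: int, output_tokens: int,
             input_per_million: float, output_per_million: float) -> float:
    """Dollar cost of one prompt, given per-million-token prices."""
    return (input_tokens * input_per_million
            + output_tokens * output_per_million) / 1_000_000


# Magistral Medium at an ASSUMED list price of $2/M input, $5/M output.
magistral = cost_usd(13, 1_236, 2.00, 5.00)
print(f"{magistral * 100:.2f} cents")  # 0.62 cents

# o3 before and after the price cut, for a hypothetical workload.
workload = (10_000, 2_000)  # hypothetical: 10k input tokens, 2k output tokens
old = cost_usd(*workload, 10.00, 40.00)
new = cost_usd(*workload, 2.00, 8.00)
print(f"o3: ${old:.3f} -> ${new:.3f} ({1 - new / old:.0%} cheaper)")
# o3: $0.180 -> $0.036 (80% cheaper)
```

Because both o3 prices fell by the same proportion, the 80% saving comes out the same for any mix of input and output tokens. The choice of workload only changes the absolute dollar amounts.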

Link 2025-06-10 o3-pro: OpenAI released o3-pro today, which they describe as a "version of o3 with more compute for better responses". It's only available via the newer Responses API. I've added it to my llm-openai-plugin plugin, which uses that new API, so you can try it out like this:

It's slow: generating this pelican took 124 seconds! OpenAI suggest using their background mode for o3 prompts, which I haven't tried myself yet.

o3-pro is priced at $20/million input tokens and $80/million output tokens, 10x the price of regular o3 after its 80% price drop this morning.

Ben Hylak had early access and published his notes so far in God is hungry for Context: First thoughts on o3 pro. It sounds like this model needs to be applied very thoughtfully. In comparison to o3:

It sounds to me like o3-pro works best when combined with tools. I don't have tool support in […].

Link 2025-06-10 AI-assisted coding for teams that can't get away with vibes: This excellent piece by Atharva Raykar offers a bunch of astute observations on AI-assisted development that I haven't seen written down elsewhere.

Atharva notes that AI is a multiplier: the more expertise you have in software engineering, the better the results you can get from LLMs. Furthermore, what helps the human helps the AI. This means good test coverage, automatic linting, continuous integration and deployment, good documentation practices and "clearly defined features, broken down into multiple small story cards". If a team has all of this stuff in place, AI coding assistants will be able to operate more reliably and collaborate more effectively with their human overseers. I enjoyed his closing thoughts about how heavier reliance on LLMs changes our craft:

Quote 2025-06-10
