How Code Reviews Are Changing With AI

Hey, Luca here, welcome to a new edition of Refactoring!

Reflections and predictions on the future of code reviews, taking inspiration from the CodeRabbit success.

There is no shortage of articles online about the future of software development and AI. I am guilty of that too 🙋‍♂️

Most articles, however, only focus on AI coding. They ponder a hypothetical future where AI may or may not write all of our code, engineers become AI managers, or they keep coding but do so 100x faster.

The tools themselves, when you look at big names like Cursor, Windsurf (reportedly just acquired by OpenAI, which is insane), and Lovable, seem to be all about coding. Or vibe-coding.

Now, if you ask me, that future might materialize one day, but that day is not today. Vibe-coding only goes so far, and both my own experiments (will write more in an upcoming article!) and those of my friends are falling somewhat short of our expectations.

But coding is only one part of the development process, and it turns out there are other parts where, perhaps more quietly, AI is making a real dent, and it feels like the future is already here.

One of these is code reviews.

While in other AI verticals you can usually point to groups of similar apps with similar traction and adoption, when it comes to AI-powered code reviews there is a single tool that is absolutely dominating: CodeRabbit.

CodeRabbit is the most installed AI app on both GitHub and GitLab. It is used in 1M+ repositories, and trusted by some of the pickiest customers you can find, like the Linux Foundation, MIT, and Mercury.

So last week I got in touch with Harjot Gill and the CodeRabbit team, and we talked at length about the future of code reviews and the impact of AI on the dev process.

Here is the agenda for today:

Let’s dive in!

Disclaimer: I am a fan of what Harjot and the team are building at CodeRabbit, and I am grateful they partnered on this piece. However, I will only write my unbiased opinion about the practices and tools covered here, including CodeRabbit.

🏆 What is the goal of code reviews in 2025?

As you may know if you have been reading Refactoring for some time, code reviews are one of my small obsessions.

Code reviews look, to me, like an imperfect solution to two supremely important problems:

1) Keeping code quality high
2) Sharing knowledge across the team

These are also self-reinforcing goals: sharing knowledge helps keep quality high and, in turn, high-quality code is generally crisper and easier for others to understand.

How do code reviews address these goals? Typically, by having code changes inspected by more than one engineer. That means at least two: the author, and one or more reviewers.

To be clear, this not only looks fine to me: it looks like the only possible way.

Still, even if we agree on the above, there is plenty left to figure out, like:

These questions matter a lot, and can make all the difference between an effective, successful review workflow and a dysfunctional, time-wasting one.

So, let’s talk about workflows.

🔄 Code review workflows

Many teams today answer these questions with a pretty dogmatic procedure that looks more or less like this:

The first glaring problem with this strategy is that it continuously breaks developers’ flow:

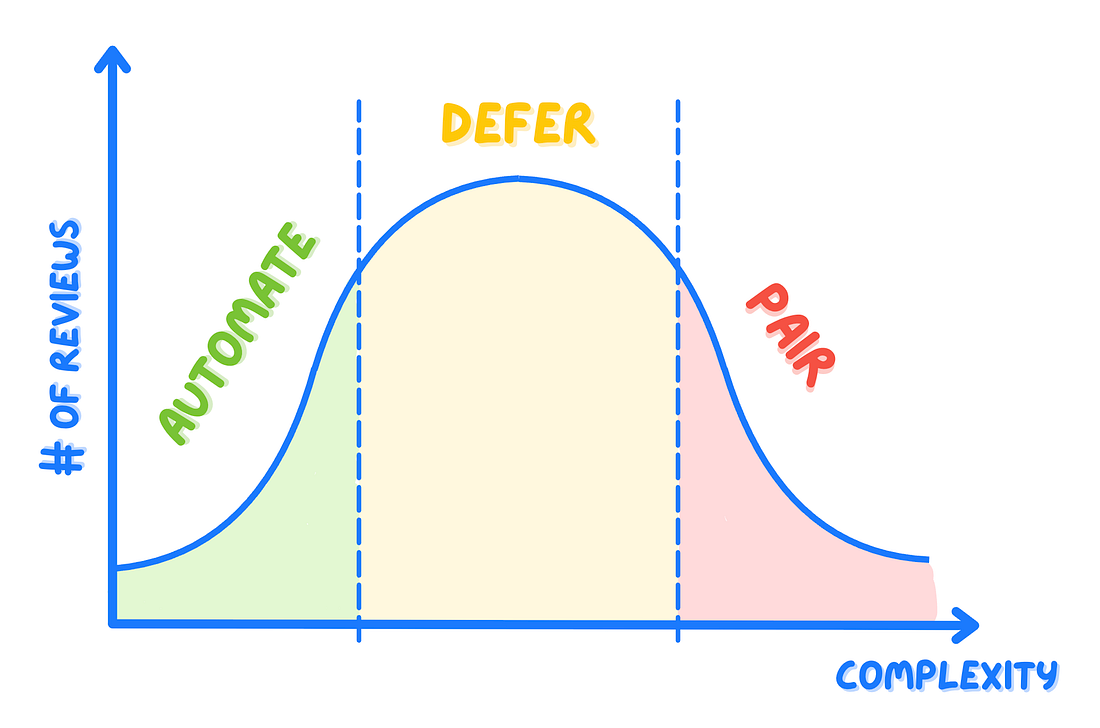

The second problem is the one-size-fits-all approach. Should all reviews be async? Or blocking? Or mandatory?

If the goal of reviews is 1) improving quality and 2) sharing knowledge, are we sure all code changes need both? Or even one of the two?

On one side, teams produce a swath of small and inconspicuous changes, for which a blocking review is questionable. On the other side, for big and ambitious PRs, it is unclear how much nuance async + low-context reviews can really capture.

So, last year we discussed a more flexible approach, with the Automate / Defer / Pair framework. Let's quickly recap:

1) 🤖 Automate

For low-risk changes with no remarkable knowledge to share (e.g., small bug fixes, dependency updates, simple refactors), rely heavily on automated checks like linters, static analysis, and comprehensive tests. Human review can be minimal or even skipped.

2) ↪️ Defer

For most changes, especially in a continuous delivery environment, consider merging first and reviewing after the merge. This works especially well when changes are released behind feature flags. The primary goals of knowledge sharing and quality improvement can still be achieved asynchronously, without blocking delivery. Feedback is gathered post-merge and improvements are addressed in follow-up changes.

3) 🤝 Pair

For complex, high-risk, or critical changes, consider synchronous collaboration, like pair programming or a dedicated pairing review session. In my experience, these are far more effective (and faster) than async back-and-forth, and allow for better discussion and deeper knowledge transfer.

This framework isn't about eliminating human oversight but about making it more targeted and impactful. We want to reduce bottlenecks while retaining (or even improving) quality and learning.

Now, how does AI fit into this picture?
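
As a quick aside before answering that question: the Automate / Defer / Pair recap can be read as a small triage rule. Below is a minimal sketch in Python, not a definitive implementation. The `Risk` levels, the `Change` fields, and the `triage` function are all hypothetical names introduced for illustration; how a team actually scores risk or decides what is "knowledge worth sharing" is an assumption left to each team.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    # Hypothetical risk levels; each team would define its own scale.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


class ReviewMode(Enum):
    AUTOMATE = "automate"  # rely on automated checks; human review minimal or skipped
    DEFER = "defer"        # merge first, review after the merge
    PAIR = "pair"          # pair programming or a dedicated pairing review session


@dataclass(frozen=True)
class Change:
    # Both fields are illustrative assumptions, not part of any real tool.
    risk: Risk
    knowledge_to_share: bool  # is there anything remarkable for teammates to learn?


def triage(change: Change) -> ReviewMode:
    """Pick a review mode following the Automate / Defer / Pair recap."""
    # Complex, high-risk, or critical changes get synchronous collaboration.
    if change.risk is Risk.HIGH:
        return ReviewMode.PAIR
    # Low-risk changes with no remarkable knowledge to share lean on automation.
    if change.risk is Risk.LOW and not change.knowledge_to_share:
        return ReviewMode.AUTOMATE
    # Everything else ("most changes") is merged first and reviewed afterwards.
    return ReviewMode.DEFER


if __name__ == "__main__":
    dependency_bump = Change(risk=Risk.LOW, knowledge_to_share=False)
    print(triage(dependency_bump).value)
```

The ordering of the checks is a design choice in this sketch: risk is evaluated first, so a high-risk change is never routed to Automate or Defer, and Defer stays the default for anything that is neither trivial nor critical.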

🪄 How AI changes reviews

The reason vibe-coding is still fuzzy and controversial, while AI code reviews are spreading like wildfire, is that the latter are immediately useful and don’t require teams to radically change the way they work.

In my experience, here are the main benefits of AI reviews:

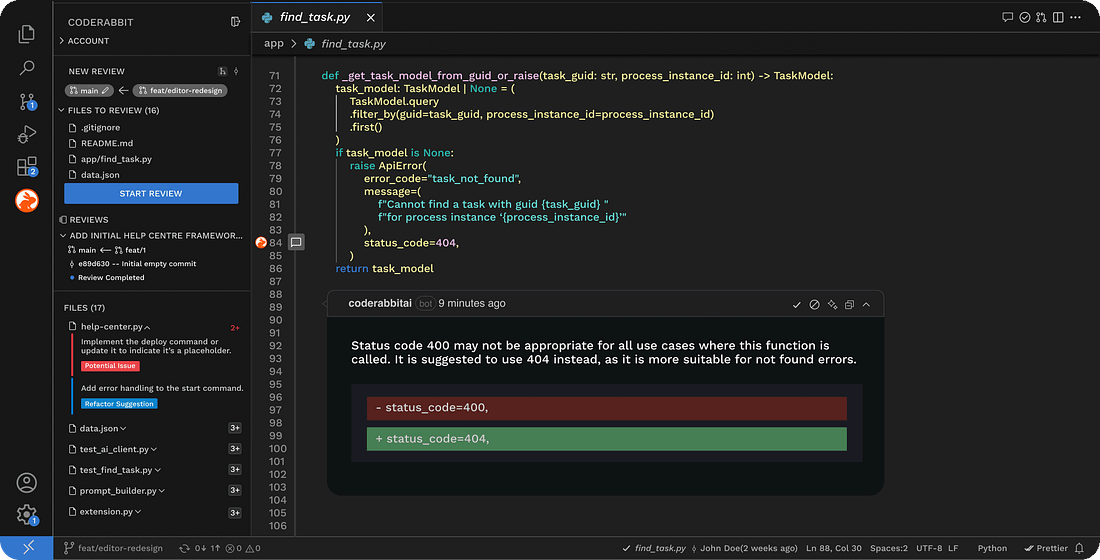

Let’s look at each of them:

1) 🗑️ Reduce toil

AI significantly expands what can be automated, especially the things that human reviewers don’t like to check. Because let’s be honest, a lot of reviewing is busy work, and AI makes it easier:

All together, this helps to 👇

2) ✨ Increase enjoyment

The whole point of reducing toil is to use human time to address higher-value topics that are uniquely human (for now 🙈). It turns out, this is also the stuff that people enjoy the most:

3) 📉 Reduce risk

Not to brag, but the Defer part of our framework above becomes much more viable with AI. If an AI agent performs a thorough first pass, the confidence to merge first and conduct a human review later increases significantly, especially for small-to-medium changes. In particular:

This is especially true as AI reviews allow us to shift QA further left, by running them straight in the IDE 👇

4) 🎓 Democratize expertise

AI can act as a basic expert-in-a-box, bringing specialized knowledge to every review. Think of hard-to-access topics like security, performance, or accessibility.

For example, even if your team doesn’t have a dedicated security expert reviewing every PR, AI can flag common vulnerabilities. Same goes for potential performance bottlenecks, or subtle accessibility issues.

This doesn’t reduce the need for experts in these domains: it reduces the amount of time they need to spend on routine busy-work, so they can work at a higher level of abstraction on strategy and platform.

🔮 Future scenarios

As part of our chat, we also brainstormed about future scenarios for AI coding and what may come in the near future. Here are the ideas I believe in most:

1) 🌊 AI needs horizontal adoption to avoid bottlenecks

The development process is a pipeline that includes several steps. If we only increase the throughput of some parts thanks to AI, we are going to create bottlenecks elsewhere.

So, to reap the benefits of AI allowing us to write more code, we also need to speed up code reviews, testing, and documentation. Otherwise we will just produce more toil for humans in some of these steps.

Now that AI IDEs like Cursor are commonplace, AI code reviews are not a nice-to-have anymore: they are essential to avoid drowning in PRs (or accepting lower-quality output).

2) 🔍 Code reasoning becomes more valuable than code generation

Speaking of reviews, I find it plausible, and even likely, that AI becomes proficient at reviewing code (identifying errors, suggesting improvements, checking conventions) before it becomes truly autonomous and reliable at writing large, complex, novel systems from scratch.

This might seem counterintuitive: if AI writes unreliable code and we need humans to guide it, how can it review code well?
Because reviewing is, in many ways, a more constrained problem: you're evaluating existing code against known patterns, at a point when a lot of uncertainty is already gone. Generating novel, high-quality code in a complex domain, by contrast, is arguably harder.

So we might see the bulk of code reviews being solved by AI even while humans are still heavily involved in the creative and design aspects of coding. That would flip the current dynamic on its head: today coding assistance is widespread, while review automation is still catching up.

3) 🧑‍⚖️ Humans as guarantors of taste and business alignment

As AI takes on more of the how (implementation details), human engineers increasingly focus on the what and, even more, on the why.

We've talked about Taste vs. Skills before. Taste is, in engineering, the ability to discern things like quality, good design, and solutions that match business needs.

For engineers, exercising their taste will mean asking questions like:

4) 🤖 Sometimes you need a leap of faith (automation)

In most workflows today, people are using AI to create drafts (drafts of code, drafts of code reviews, drafts of docs) and have humans do a final pass. This is nice, but it also corners people into a lot of context switching and micro-tasks.

I believe that sometimes, under controlled conditions, we should make a leap of faith and completely automate some tasks. We should switch from an “approve first” to a “possibly revert later” approach.

So ask yourself: what are the things AI can do that would be relatively inexpensive to roll back if it gets them wrong? Chances are it’s more than you think.

📌 Bottom line

And that's it for today! Here are the main takeaways:

See you next week!

Sincerely,
Luca